The Birth of AI Operating Systems

macOS, Ubuntu and Windows are popular operating systems (OSes) for desktop users. There are also OSes for mobile phones, cloud servers, etc. Let’s refer to these as “standard OSes”.

With the advent of large language models such as GPT-4 (used by ChatGPT), “AI operating systems” (AI OSes) have become possible. In this post, I explain what I mean by this, what the architecture of an AI OS could look like, and what I think the next steps are.

Background

GPT (Generative Pre-trained Transformer) is a family of large language models (LLMs) that are very good at one specific task: predicting the tokens (words or word fragments) most likely to follow a given piece of text. It is based on the Transformer architecture introduced by Vaswani et al. in “Attention Is All You Need”. The models are trained on vast amounts of data from many sources and are then fine-tuned to work even better for certain types of tasks, e.g. summarization, translation and answering questions.

While the models themselves are massive (GPT-3’s 175 billion parameters alone take up hundreds of GB), their “context window”, i.e. short-term memory, is comparatively small. For example, the standard GPT-4 model has a context window of 8K tokens (a 32K-token variant also exists), which corresponds to roughly 32K characters or 6K words of English text. This may seem like a lot, but it quickly becomes the limiting factor for more complex tasks, e.g. summarizing or generating long text.
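As a quick check of these figures, the sketch below applies two common rules of thumb for English text: about 4 characters and about 0.75 words per token. Both ratios are approximations (real counts depend on the tokenizer and the text), and the function name is purely illustrative.

```python
# Rough rules of thumb for English text; not exact tokenizer behaviour.
CHARS_PER_TOKEN = 4
WORDS_PER_TOKEN = 0.75


def estimate_capacity(context_tokens: int) -> tuple[int, int]:
    """Return the approximate (characters, words) that fit in a context window."""
    chars = context_tokens * CHARS_PER_TOKEN
    words = int(context_tokens * WORDS_PER_TOKEN)
    return chars, words


chars, words = estimate_capacity(8 * 1024)  # GPT-4's standard 8K (8,192-token) window
print(f"8K tokens ~ {chars:,} characters ~ {words:,} words")
```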

LLMs can also be “fine-tuned” (i.e. further trained on custom data) to extend their knowledge. Fine-tuning is a handy way to ease the context-window pressure mentioned above: by specializing the LLM in advance for specific tasks, prompts need fewer instructions and examples. Unfortunately, at the time of writing, OpenAI does not support fine-tuning GPT-4.

It’s also worth noting that text generation with these models is still quite slow. For example, a request to the GPT-4 API currently takes seconds or tens of seconds, depending on the length of the response. This is a very long time compared to the milliseconds, or even microseconds, that many tasks take on a local machine.

Giving It Wings

By itself, an LLM “only” generates text. However, we can use this ability as follows:

1. Give it a goal consisting of some high-level tasks to perform.
2. Tell it that it can use a predefined set of actions to achieve these tasks.
3. Repeat until the goal is complete:
   a. Ask it to create a plan to achieve the tasks and a list of actions to run.
   b. Execute the actions on its behalf.
   c. Tell it the results of the actions.
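The loop above can be sketched in Python as follows. This is a minimal illustration, not the code behind any of the projects mentioned below: `fake_llm` is a hypothetical stand-in for a real LLM call, and the one-action-per-line `ACTION <name> <argument>` / `DONE` reply format and the action names are assumptions made for the example.

```python
from typing import Callable

# Hypothetical predefined actions: name -> function(argument) -> result text.
ACTIONS: dict[str, Callable[[str], str]] = {
    "echo": lambda arg: arg,
    "upper": lambda arg: arg.upper(),
}


def fake_llm(goal: str, history: list[str]) -> str:
    """Hypothetical stand-in for an LLM call: plans two actions, then reports DONE."""
    if not history:
        return "ACTION upper hello world\nACTION echo done shouting"
    return "DONE"


def run_agent(goal: str, llm: Callable[[str, list[str]], str], max_steps: int = 10) -> list[str]:
    """Ask the LLM for actions, run them, and feed the results back until DONE."""
    history: list[str] = []  # results reported back to the model on every turn
    for _ in range(max_steps):  # guard against plans that never finish
        reply = llm(goal, history).strip()
        if reply == "DONE":  # the model says the goal is complete
            break
        for line in reply.splitlines():  # each line of the plan is one action
            parts = line.strip().split(" ", 2)
            if len(parts) != 3 or parts[0] != "ACTION":
                history.append(f"error: could not parse {line!r}")
                continue
            _, name, arg = parts
            action = ACTIONS.get(name)
            result = action(arg) if action else f"error: unknown action {name!r}"
            history.append(f"{name}({arg!r}) -> {result!r}")  # tell the model what happened
    return history


for entry in run_agent("Shout a greeting", fake_llm):
    print(entry)
```

Reporting parse errors and unknown actions back as ordinary results, instead of raising, lets the model correct itself on the next turn.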

I wrote a proof of concept of the above approach: “How I Got ChatGPT to Write Complete Programs”. Around the same time, similar proofs of concept were created (e.g. AutoGPT, BabyAGI and my own RoboGPT). These are still experimental and not very reliable attempts at creating (semi-)Autonomous AI Agents (A3), but they show that the general approach is promising.

I view these experiments as precursors of well-designed, robust, and extensible AI operating systems that can reliably perform a wide variety of complex tasks.

The AI Operating System

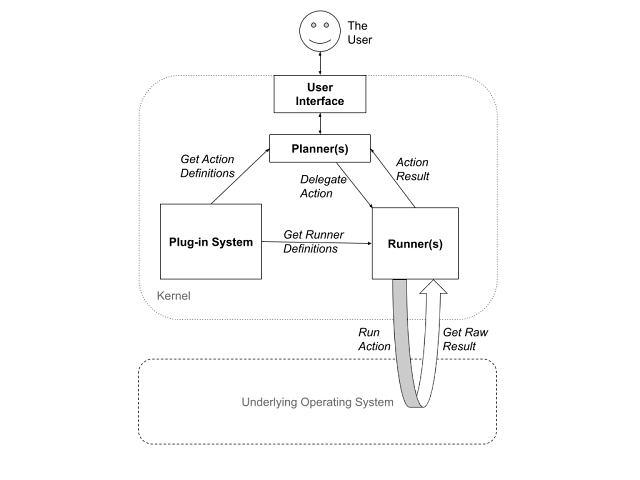

There are still many unknowns, but the main components of an AI Operating System appear to be:

1. Kernel. Manages short-term and long-term storage, AI and non-AI processes, and inter-process communication.
2. Plug-in System. Lets the user add or remove capabilities of the system as a whole. For example, a “file system” plug-in would allow the system to perform actions such as “list a directory”, “read a file” and “write a file”.
3. [Task] Planner(s). An AI process responsible for evaluating the current goal(s), task(s) and intermediate results, generating new tasks (if any), and prioritizing the remaining tasks. The key here is divide and conquer: a single user-provided goal is repeatedly split into smaller tasks until each of them can be performed by the available actions.
4. [Action] Runner(s). Once a task has been reduced to specific actions that the system knows how to execute, the action runner executes them and returns their results (which could be failures).
5. User Interface (UI). A way for the user to enter the initial goal, to see the intermediate and final results, and (optionally) to interact with the system while it works: updating the goal, suggesting alternative tasks, confirming proposed actions before they are executed, etc.
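The Planner’s divide-and-conquer strategy can be sketched as a recursive decomposition. In this illustration, the hypothetical `split_task` callable stands in for an LLM call that breaks a task into subtasks, a task counts as runnable once it names one of the available actions (arguments are omitted for brevity), and a depth limit stops runaway splitting.

```python
from typing import Callable

# Hypothetical action names, e.g. provided by a "file system" plug-in.
AVAILABLE_ACTIONS = {"list_directory", "read_file", "write_file"}


def decompose(task: str, split_task: Callable[[str], list[str]],
              depth: int = 0, max_depth: int = 5) -> list[str]:
    """Split a task recursively until every leaf is an available action."""
    if task in AVAILABLE_ACTIONS:
        return [task]  # already runnable
    if depth >= max_depth:
        raise RecursionError(f"could not reduce {task!r} to available actions")
    subtasks = split_task(task)  # in a real system this would be an LLM call
    if not subtasks:
        raise ValueError(f"planner returned no subtasks for {task!r}")
    actions: list[str] = []
    for sub in subtasks:  # depth-first, preserving the planned order
        actions.extend(decompose(sub, split_task, depth + 1, max_depth))
    return actions


# Hypothetical canned plan standing in for the LLM.
PLAN = {
    "back up notes": ["find notes", "copy notes"],
    "find notes": ["list_directory"],
    "copy notes": ["read_file", "write_file"],
}

print(decompose("back up notes", lambda t: PLAN.get(t, [])))
```

A real Planner would also re-plan as results come in rather than decomposing everything up front, which the loop sketched earlier in this post allows.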

A few things to note about the above items:

The Plug-in System described above is used to add capabilities to the AI Operating System directly, allowing it to execute different types of actions. This plug-in ecosystem lives outside of any LLM. In comparison, ChatGPT’s plug-in system lives within the OpenAI ecosystem and is used to augment the capabilities of ChatGPT itself.

For each action, a plug-in defines its name, its syntax (instructions telling the LLM how to write the text for the action), a parser (a small program that converts the text written by the LLM into a logical action to run) and a runner spec (what to do when the action is run). It may be possible to use a standard parser generator instead, in which case the syntax and the parser are replaced by a grammar definition.
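A plug-in following this description might bundle each action’s name, syntax, parser and runner. The sketch below is one possible shape, assuming a simple one-line syntax (`read_file <path>`); the `Action` and `Plugin` classes and the file-system example are hypothetical, and a real implementation would likely validate paths and use a proper grammar.

```python
from dataclasses import dataclass, field
from pathlib import Path
from typing import Callable, Optional


@dataclass
class Action:
    name: str
    syntax: str  # instructions shown to the LLM
    parse: Callable[[str], Optional[dict]]  # LLM text -> logical action (None if no match)
    run: Callable[[dict], str]  # logical action -> result text


@dataclass
class Plugin:
    name: str
    actions: list[Action] = field(default_factory=list)

    def prompt(self) -> str:
        """Describe the available actions so the LLM knows how to invoke them."""
        return "\n".join(f"- {a.name}: {a.syntax}" for a in self.actions)

    def dispatch(self, text: str) -> str:
        """Try each action's parser on the LLM text and run the first match."""
        for action in self.actions:
            parsed = action.parse(text)
            if parsed is not None:
                return action.run(parsed)
        return f"error: no action matches {text!r}"


def parse_read_file(text: str) -> Optional[dict]:
    cmd, _, path = text.strip().partition(" ")
    path = path.strip()
    return {"path": path} if cmd == "read_file" and path else None


def run_read_file(args: dict) -> str:
    try:
        return Path(args["path"]).read_text()
    except OSError as exc:
        return f"error: {exc}"  # failures are reported back as results


file_system = Plugin("file_system", [
    Action("read_file", "read_file <path>  (returns the file's contents)",
           parse_read_file, run_read_file),
])
print(file_system.prompt())
```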

An action can itself run an AI process, e.g. to summarize some text. However, this does not affect the overall architecture, since such AI processes should be completely isolated from the Planner(s).

Here is what the high-level AI Operating System architecture could look like:

Key Challenges

There are still many challenges and uncertainties in developing AI Operating Systems.

For example, for the Planner(s) to have enough context to make effective decisions, the context window of the LLM(s) they use must contain the relevant goal, tasks, available actions and results so far. This is particularly challenging for complex goals, where the number of tasks and intermediate results can quickly outgrow a window of a few thousand tokens.

Another challenge is reliably parsing the text of the actions suggested by the Planner(s). While LLMs have become very good at following specific syntax guidelines, mistakes still occur. For example, GPT-4 sometimes has difficulty escaping characters correctly, which is why using JSON to convey code is not ideal.
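One way around the escaping problem is to let the LLM write code verbatim between delimiter lines rather than inside a JSON string. The syntax below (`WRITE_FILE <path>` followed by the content between `<<<` and `>>>` lines) is a hypothetical example, not a format used by any particular tool. Its main weakness is that the content itself must not contain a line consisting of just `>>>`.

```python
import re

# Hypothetical block syntax: the body is taken verbatim, so quotes and
# backslashes need no escaping (unlike code embedded in a JSON string).
BLOCK = re.compile(
    r"^WRITE_FILE (?P<path>\S+)\n<<<\n(?P<body>.*?)\n>>>$",
    re.DOTALL | re.MULTILINE,
)


def parse_write_actions(text: str) -> list[tuple[str, str]]:
    """Extract (path, content) pairs from LLM output; surrounding prose is ignored."""
    return [(m["path"], m["body"]) for m in BLOCK.finditer(text)]


llm_output = r'''I will create the script now.
WRITE_FILE hello.py
<<<
print("Path: C:\temp\new", 'quote: "hi"')
>>>
Done.'''

for path, body in parse_write_actions(llm_output):
    print(path)
    print(body)
```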

Most of the actions run by the Runner(s) should be fairly simple. However, some may require expressing both the request and the result compactly while still conveying enough information back to the Planner(s), which may be difficult in some cases.
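For results that are too long to send back in full, such as the output of a command, one simple option is to keep the beginning and the end and state how much was cut, so the Planner(s) at least know that the output was truncated. The helper below is a hypothetical sketch; summarizing the result with a separate AI process is another option.

```python
def compact_result(text: str, max_chars: int = 2000) -> str:
    """Keep at most `max_chars` characters of `text` (head and tail), plus a marker."""
    if len(text) <= max_chars:
        return text
    keep = max_chars // 2
    omitted = len(text) - 2 * keep
    head = text[:keep]
    tail = text[len(text) - keep:]  # slicing this way also works when keep == 0
    return f"{head}\n[... {omitted} characters omitted ...]\n{tail}"


print(compact_result("x" * 5000, max_chars=100))
```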

Final Thoughts

The field of (semi-)Autonomous AI Agents (A3) is evolving fast. In this post, I proposed a general architecture for AI Operating Systems that could be used to run these agents. A future post may cover the plug-in system in more detail.

I am currently working on improving RoboGPT based on the architecture proposed in this blog post.

If you have any suggestions on what to try next, or would simply like to stay up to date with my work, follow or DM me on Twitter or LinkedIn.

Thank you for your support and interest in my work!